Application Drives Formalism: Why Machine Learning is Booming and Three Bags of Tricks

The field of machine learning is sufficiently young that it is still rapidly expanding, often by inventing new formalizations of machine-learning problems driven by practical applications.

Mike Jordan, professor of statistical machine learning at UC Berkeley, in a recent survey of the supervised, unsupervised, and reinforcement learning paradigms

Machine learning techniques apply to a rapidly growing set of important problems, and the data available to power these applications is growing just as quickly. The constant push to invent new formalisms, driven by the pursuit of better performance on practical problems, is yielding increasingly creative compositions of the learning paradigms that Jordan outlines. Taken together, these factors are driving a boom in novel machine learning research.

The boom covers both the exploration of new approaches and the refinement of older ones that only recently became practical. For example, neural networks have been around since the 1950s, and deep neural networks since the 1980s. Despite that long history, deep nets have taken off only recently, thanks to a confluence of trends: large available data sets, new hardware (e.g., from NVIDIA and Nervana), and faster approaches to training the networks.

Deep learning papers represented only ~0.15% of the computer science papers published on arXiv in early 2012, but that share grew ~10x to ~1.4% by the end of 2014. In 2016, 80% of the papers at many top NLP conferences are deep learning papers. Deep nets are now demonstrating state-of-the-art results across applications in computer vision, speech, NLP, bioinformatics, and a growing list of other domains.

Although deep nets are currently non-interpretable, we’ve seen interesting work in progress that composes deep nets with classical symbolic AI, treating logic programs as a prior structure on the network. This approach may allow deep nets to learn logic programs that ultimately yield new scientific insights in a range of fields. As Mike Jordan mentions in the quote opening this post, machine learning often evolves rapidly when we invent new formalisms driven by insights from practical applications. As deep nets continue to deliver state-of-the-art results for various tasks, efforts to invent new formalisms are an area of focus, much as efforts to understand the statistical properties of boosting led to a deeper understanding of regularization in the mid-2000s.

Machine learning researchers focusing on applications at scale have developed a rich set of techniques for featurizing massive amounts of heterogeneous data to feed into well-worn logistic regression and boosted decision tree models. Since this approach is robust and very fast in production, the “rich features with simple models” approach powers many of the largest systems we use every day, like Facebook newsfeed ranking.
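To make the “rich features with simple models” idea concrete, here is a minimal sketch of hashing a heterogeneous record into a sparse feature vector that a logistic regression could score. Everything in it is an assumption made for illustration: the record fields, the bucket count, and the helper names `bucket`, `featurize`, and `predict_proba` are hypothetical and do not describe any production system.

```python
import math
import zlib

NUM_BUCKETS = 2 ** 10  # assumed size of the hashed feature space

def bucket(name):
    """Map a feature name to a stable index (crc32 is deterministic across runs)."""
    return zlib.crc32(name.encode("utf-8")) % NUM_BUCKETS

def featurize(record):
    """Turn a heterogeneous record into a sparse {index: value} feature vector."""
    named = []
    for key, value in record.items():
        if isinstance(value, bool):            # check bool first: bool is a subclass of int
            named.append((f"{key}={value}", 1.0))
        elif isinstance(value, (int, float)):  # numeric fields keep their value
            named.append((key, float(value)))
        elif isinstance(value, str):           # text fields become one feature per token
            named.extend((f"{key}:{tok}", 1.0) for tok in value.lower().split())
    features = {}
    for name, val in named:
        idx = bucket(name)
        features[idx] = features.get(idx, 0.0) + val  # colliding features share a bucket
    return features

def predict_proba(weights, features):
    """Logistic regression score over the sparse features."""
    z = sum(weights.get(idx, 0.0) * val for idx, val in features.items())
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical record mixing numeric, boolean, and text fields.
record = {"age_days": 3, "is_friend": True, "text": "Hello hello world"}
features = featurize(record)
```

The model stays a plain linear scorer; all of the cleverness lives in `featurize`, which is one reason this style of system is cheap to serve.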

Over the years, we’ve developed some very clever tricks for turning very hard problems into simpler ones. One important set of such tricks involves techniques like variational methods, which recast intractable problems as approximate optimization problems (often convex ones) and then apply well-understood optimization algorithms that yield good performance and often have fast parallel and streaming variants.
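One of the simplest instances of the variational idea is the Jensen lower bound on a log partition function: for any distribution q, the sum of q_i * (log w_i - log q_i) never exceeds log Z, and the bound is tight when q is proportional to the weights. Because the bound is concave in q, maximizing it is a convex optimization problem. The sketch below uses made-up weights and is small enough to check exactly; in a real intractable problem the sum could not be enumerated and q would be restricted to a tractable family.

```python
import math

def log_partition(weights):
    """Exact log Z = log(sum of unnormalized weights); infeasible at real scale."""
    return math.log(sum(weights))

def variational_bound(q, weights):
    """Jensen lower bound on log Z: sum_i q_i * (log w_i - log q_i)."""
    return sum(
        qi * (math.log(wi) - math.log(qi))
        for qi, wi in zip(q, weights)
        if qi > 0
    )

weights = [2.0, 1.0, 0.5, 0.5]   # made-up unnormalized probabilities
log_z = log_partition(weights)

uniform = [0.25] * 4                              # a crude approximating distribution
optimal = [w / sum(weights) for w in weights]     # q proportional to the weights

gap_uniform = log_z - variational_bound(uniform, weights)   # positive: bound is loose
gap_optimal = log_z - variational_bound(optimal, weights)   # about zero: bound is tight
```

The gap between log Z and the bound is the KL divergence from q to the normalized weights, so shrinking the gap and improving the approximation are the same thing.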

A second set of sneaky tricks includes methods like distant supervision, self-training, and weak supervision, which start with an insufficient dataset and incrementally bootstrap their way to enough data for supervised learning. With these methods, you may or may not have some labeled data, you definitely have a bunch of unlabeled data, and you have a ‘function’ (read: a dirty yet clever hack) that assigns noisy labels to the unlabeled data. Once you have lots of data with noisy labels, you’ve turned the problem into vanilla supervised learning.
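The recipe above can be sketched in a few lines. In this toy version, a keyword heuristic plays the role of the noisy labeling ‘function’, and a small naive Bayes classifier stands in for the vanilla supervised learner. The corpus, the keywords, and all function names are invented for illustration; real systems typically combine many such heuristics and model their noise.

```python
import math
from collections import Counter

# Hypothetical unlabeled corpus, made up for illustration.
UNLABELED = [
    "great product works well",
    "terrible quality broke fast",
    "great value and fast shipping",
    "terrible support never again",
    "works well love it",
    "broke after a week",
]

def labeling_function(text):
    """Noisy heuristic: 1 (positive), 0 (negative), or None (abstain)."""
    words = text.split()
    if "great" in words:
        return 1
    if "terrible" in words:
        return 0
    return None

def build_noisy_dataset(texts):
    """Keep only the examples the heuristic is willing to label."""
    labeled = [(t, labeling_function(t)) for t in texts]
    return [(t, y) for t, y in labeled if y is not None]

def train_naive_bayes(dataset):
    """Ordinary supervised learning (multinomial naive Bayes) on the noisy labels."""
    word_counts = {0: Counter(), 1: Counter()}
    class_counts = Counter()
    for text, label in dataset:
        class_counts[label] += 1
        word_counts[label].update(text.split())
    vocab = set(word_counts[0]) | set(word_counts[1])
    return word_counts, class_counts, vocab

def predict(model, text):
    """Return the class with the higher smoothed log-probability."""
    word_counts, class_counts, vocab = model
    total = sum(class_counts.values())
    scores = {}
    for label in (0, 1):
        n_words = sum(word_counts[label].values())
        score = math.log(class_counts[label] / total)
        for w in text.split():
            if w in vocab:  # ignore words never seen in training
                score += math.log((word_counts[label][w] + 1) / (n_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

noisy = build_noisy_dataset(UNLABELED)
model = train_naive_bayes(noisy)
```

The heuristic abstains on "works well love it" and "broke after a week", yet the trained model can still classify them, because it picked up co-occurring words such as "works" and "broke" from the noisily labeled examples.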

A third bag of tricks arises from transfer learning: applying knowledge learned from one problem to a different but related problem. Transfer learning is especially exciting because we can learn from a data-rich domain, even one with a totally different feature space and data distribution, and apply what we learn to bootstrap another domain where we may have much less data to work with.
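As a rough illustration, the sketch below shows one of the simplest forms of transfer: train a logistic regression on a data-rich source task, then use its weights as the starting point for a few fine-tuning steps on a data-poor target task. It assumes, for simplicity, that both tasks share the same two-dimensional feature space, which is a narrower setting than the one described above. The synthetic tasks, step counts, and learning rate are all arbitrary choices.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))

def log_loss(w, data):
    """Mean logistic loss of weights w on (features, label) pairs."""
    total = 0.0
    for x, y in data:
        margin = dot(w, x) if y == 1 else -dot(w, x)
        total += math.log1p(math.exp(-margin))
    return total / len(data)

def train(w_init, data, steps, lr=0.5):
    """Plain batch gradient descent on the logistic loss, starting from w_init."""
    w = list(w_init)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sigmoid(dot(w, x)) - y
            for j, xj in enumerate(x):
                grad[j] += err * xj / len(data)
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w

def accuracy(w, data):
    hits = sum((dot(w, x) > 0) == (y == 1) for x, y in data)
    return hits / len(data)

# Data-rich source task (synthetic): label is 1 when x0 + x1 > 0.
grid = [i / 4 for i in range(-4, 5)]
source = [((a, b), int(a + b > 0)) for a in grid for b in grid if a + b != 0]

# Data-poor target task (synthetic): label is 1 when x0 + 0.5 * x1 > 0.
target = [((1.0, 0.5), 1), ((-1.0, -0.5), 0), ((0.5, 1.0), 1), ((-0.5, -0.25), 0)]

w_source = train([0.0, 0.0], source, steps=200)  # learn on the source task
w_target = train(w_source, target, steps=5)      # fine-tune on the target task
```

Comparing `log_loss(w_source, target)` with `log_loss([0.0, 0.0], target)` shows how much of a head start the source weights give before any target data has been used.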

Related Companies

Related Content

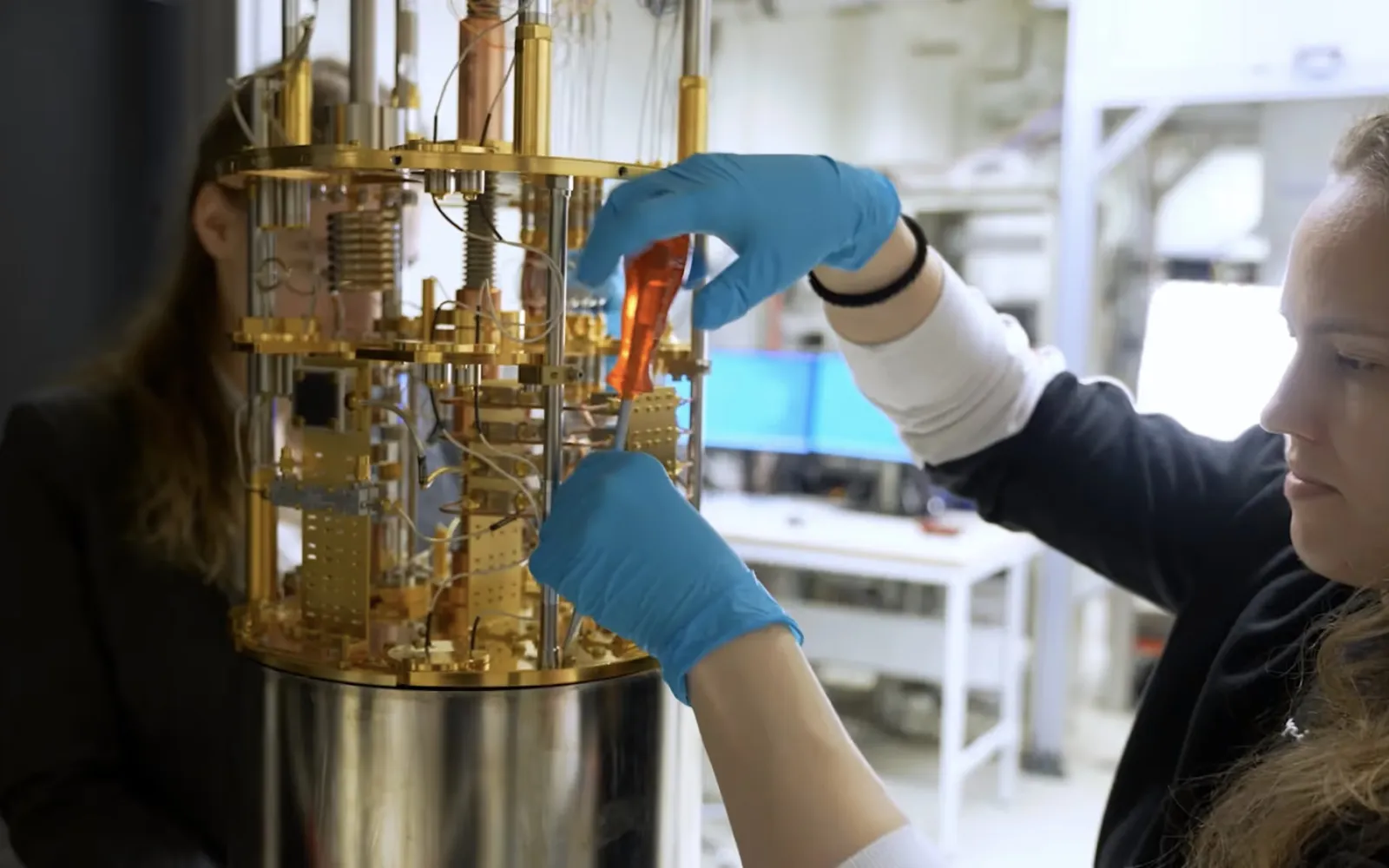

DCVC DTOR 2024: With quantum computing, we invest in the picks and shovels

Odyssey is delivering powerful new 3D generative AI tools to Hollywood

IBM builds Q-CTRL’s error-suppression software into its quantum computing service